16 Old-School Ingredients That Are Straight Up Unhealthy

One of the hardest things about trying to stay healthy is that what is healthy always seems to be changing. This isn't necessarily a bad thing; new research gives us better insight into how to best care for our bodies. However, it can be frustrating when you've finally found a balanced diet that works for you, only to find out that, surprise! This thing you love is actually killing you.

Take eggs, for example. They were a staple in the human diet for thousands of years, and then in the '60s, the American Heart Association (AHA) suggested people reduce their intake of eggs because of cholesterol. Then, new evidence indicated that eggs are fine... until 1995, when the AHA doubled down on restricting egg consumption. Today, eggs are considered fairly healthy, so long as you eat them in moderation.

With all this confusion around one staple food, it's easy to see how people in the past may have assumed something was healthy, or at least not unhealthy for them, only to be proven wrong later. Below, we've highlighted some old-school ingredients that turned out to be unhealthy after all. Be warned: It gets a little gruesome near the end.

1. Lard

Lard is usually made from pig fat, which is rendered to create a smooth, white, semi-solid fat. However, a few things caused it to fall out of style. Upton Sinclair published "The Jungle" in 1906, describing meat-packing plants and the pig factories where lard was made. The unsavory description of what happened to workers who fell in the vats ("When they were fished out, there was never enough of them left to be worth exhibiting") put a lot of people off the substance.

At the same time, the second Industrial Revolution was in full swing. During this time, butter and oil started to be sold in stores, and an American chemist developed a cheaper lard alternative called shortening.

While there are benefits to using lard for certain baked goods, it's high in saturated fat and cholesterol. Additionally, it can retain a pork flavor. This can work in your favor for some dishes, but probably not for a blueberry pie.

2. Butylated hydroxyanisole

Multiple food additives are banned in Europe but not in the U.S., and one of those is butylated hydroxyanisole, or BHA. BHA is a preservative used in cured meats and other types of foods, as well as things like rubber, plastic, and glue. It first hit the market in the 1940s, and unfortunately, some U.S. manufacturers still use it today.

Studies have shown that BHA is likely carcinogenic and may cause other health problems as well. So why is it banned in the EU but not the U.S.? It has to do with how the different administrations handle food bans. In the EU, something is banned if there is evidence to suggest it might be harmful. In the U.S., the Food and Drug Administration (FDA) only bans a food or additive if evidence proves that it's harmful. Although BHA is still used in foods today, the FDA now limits how much manufacturers can use.

Its use has also decreased as people have become more aware of what's in their food. Additionally, new, more natural alternatives are being introduced, which will hopefully do away with BHA for good.

3. High-fructose corn syrup

Nearly 400 million metric tons of corn is produced in the U.S. per year. There are several reasons for this, but primarily, it's because it's an easy crop to grow and there are millions of dollars in subsidies available for corn growers. These subsidies started in 1933, and we've been pumping out insane amounts of corn ever since. People don't eat nearly the amount of corn we produce, so the U.S. had to start finding other uses for it.

One of those came about in 1957 with the invention of high-fructose corn syrup (HFCS). While some studies show that HFCS isn't all that different from typical sugar, others suggest that it adds excess fructose into the diet, which can increase risks of health problems.

Many companies still use high-fructose corn syrup in their products today, although several are reverting to real sugar as consumers have become more health-conscious. According to the U.S. Department of Agriculture (USDA), the use of HFCS has been declining steadily in the U.S. since its peak in 1999.

4. Titanium dioxide

Although titanium dioxide was discovered in the late 18th century, it wasn't until after World War II that manufacturers started adding it to foods to brighten whites and enhance color. It's been used in things like candy, salad dressing, chewing gum, and ice cream. For many years, scientists have been raising concerns about its safety.

Recent studies show that titanium dioxide may have the ability to damage DNA. It may also have the ability to disrupt the endocrine system, which is the system in our bodies that produces and releases hormones. This can lead to health problems like diabetes and obesity.

The European Union banned titanium dioxide in 2022. While still legal in the U.S., it's now under intense scrutiny. The FDA is currently reviewing a petition to repeal the authorization of titanium dioxide in food. In the meantime, it requires that the quantity of titanium dioxide not exceed 1% by weight of the food.

5. Azodicarbonamide

If you were on the internet in 2014, you may remember there being a big brouhaha because Subway used a chemical in its bread that was also found in yoga mats. That chemical was azodicarbonamide (ADA), a substance developed in the 1960s that helps improve dough elasticity and texture — and yes, it's also a chemical used in yoga mats and other plastic items.

There's a tiny health risk that comes along with azodicarbonamide, however, and by tiny, we mean potentially major. During the bread-making process, azodicarbonamide breaks down into two substances: semicarbazide and urethane. Semicarbazide has been shown to cause cancer in mice, and the World Health Organization (WHO) determined that urethane may cause cancer, though it admits further study is needed.

Subway was a little unfairly maligned; several other fast food chains, including McDonald's, Chick-fil-A, Wendy's, White Castle, and Jack in the Box, also used azodicarbonamide in their breads. These brands have all since removed azodicarbonamide from their ingredient lists, though the chemical isn't banned in the U.S. It is, however, banned in certain states, like New York, and in Europe and Australia.

6. Olestra

The diet fads of the '90s and early aughts were wild, and perhaps nothing demonstrates that quite like olestra. Olestra is a fat substitute with zero calories, zero cholesterol, and zero fat — because your body can't digest it. It was popularly used in snack foods, like Frito-Lay's WOW! chips.

The FDA stated that olestra was safe in small doses, but the problem with snack food is that people often don't eat it in small doses. This led to olestra causing two problems in the body. First, it would interfere with the absorption of nutrients, leaving snackers deficient in certain vitamins and minerals. Second, it caused a lot of gastrointestinal distress, including stomach cramps, diarrhea, and anal leakage.

Olestra ended up being banned in Europe but not the U.S. The FDA still insists that it's safe in small doses, though it does require foods with olestra to be fortified with extra vitamins and minerals. Despite the FDA's continued approval, most manufacturers have stopped using it entirely.

7. Potassium bromate

Azodicarbonamide isn't the only bread additive that's been shown to potentially have negative health effects. Another is potassium bromate, a chemical additive that strengthens dough and helps it rise better. It was discovered in the '60s and is used in bread, as well as other dough-based foods like flour tortillas, soft pretzels, pizzas, bagels, and crepes. It can also be added to flour.

The problem with potassium bromate is that it can cause cancer. Studies have shown that in rats given potassium bromate, the substance caused and accelerated the growth of cancerous tumors.

Is it dangerous to humans? The answer is... maybe. That "maybe" was enough for the European Union, the United Kingdom, Canada, Brazil, and several other countries to ban the additive, but not the U.S. It's still allowed here in very small amounts, even though the FDA has not reviewed the safety of potassium bromate since 1973.

8. Unpasteurized milk

We've talked before about the dangers of raw milk, which include possible contamination with blood and fecal matter and germs like salmonella, E. coli, listeria, and campylobacter. It even carries a tuberculosis risk.

Back in the before-times, people had no choice but to drink unpasteurized milk because pasteurization hadn't been invented yet. That all changed when Louis Pasteur created his eponymous process in 1864. By 1911, major cities like New York City and Chicago had mandated pasteurization for commercially sold milk. By the '20s, it had become fairly standard, and after new techniques and processes for pasteurization were developed in the late '70s, raw milk became rare.

That being said, it's not banned in the U.S., since it's kind of hard to ban people from drinking what their own livestock produces. However, the FDA has banned the interstate sale of the stuff, and individual states have their own regulations for raw milk. Over a dozen ban its sale, while about a dozen more only allow its sale on farms.

9. Propylparaben

Keeping food fresh has always been pretty important for humans. If fruit goes bad, it gets covered in mold, and if potatoes go bad, you could end up extremely sick with solanine poisoning. Over the centuries, we've come up with tons of ways to keep our food from spoiling, and in 1924, scientists stumbled upon parabens. Parabens — including propylparaben — are artificial compounds that are good at preventing the growth of mold, bacteria, and yeast.

By the 1950s, the use of propylparaben as a preservative became widespread in baked goods and packaged foods. However, more modern research has raised some red flags about propylparaben and parabens in general. Propylparaben affects the endocrine system, which controls hormones. Research has found that it can alter reproductive and thyroid hormone levels, which may reduce fertility and damage ovarian and uterine function.

Current studies on the effects of propylparaben are limited, but the EU found cause to ban it in 2006. Though it's still approved by the FDA in the U.S., many companies have vowed to remove parabens from their products or to keep them out.

10. Certain food dyes

The FDA's ban on red dye No. 3 is only the most recent in a long history of artificial food dye bans. Some of the earliest bans were enacted in 1956, with orange No. 1 being banned for causing gastrointestinal issues in children, and red No. 32 being banned due to research suggesting it could cause organ damage and cancer. Other potentially carcinogenic dyes that are now banned include red No. 1 and 2, violet No. 1, and green No. 1, while others have been banned for causing health issues like intestinal lesions, heart damage, and damage to the adrenal cortex.

As a result, there are now only six artificial dyes that are approved for use in the U.S.: green No. 3, red No. 40, yellow No. 5 and 6, and blue No. 1 and 2. The FDA is working to eliminate these from the market as well, and in the meantime, several states have gone ahead and banned them on their own.

11. Artificial trans fat

Earlier, we talked about the lard alternatives created during the second Industrial Revolution. Shortening was one of those. It's a type of artificial trans fat, which is made when liquid oils are treated with hydrogen gas to make them solid or semisolid. The most common of these was partially hydrogenated oil. These fats were cheap to make, and consumers believed them to be healthier than animal-based fats. For these reasons, manufacturers started using them in products like baked goods, snack foods, and spreads. They were also a common choice for deep-frying foods.

Although foods made with artificial trans fats were popular in the '70s, this was about the time that people started to suspect that these fats might not be as healthy as they first thought. By the 1990s, it was abundantly clear that artificial trans fats not only raised levels of LDL, or "bad" cholesterol, but also lowered levels of HDL, or "good" cholesterol. This increases the risk of conditions like heart disease, diabetes, and dementia. In 2015, the FDA issued a ban on partially hydrogenated oils in the food supply, giving manufacturers three years to eliminate the ingredient from their products.

12. Cyclamate

It's easy to think about artificial sweeteners as a more modern thing, but the first artificial sweetener, saccharin, was discovered in the late 1800s. Cyclamate came around in the 1950s and became incredibly popular because it was 30 to 40 times sweeter than sugar, but had no calories. Manufacturers also liked it because it was stable at high temperatures and water-soluble. By the '60s, it was being used primarily in sodas, but also in a variety of other foods like canned fruit, desserts, and salad dressings.

Unfortunately, it didn't take long for red flags to pop up. By 1964, there were whispers that cyclamate caused health problems like diarrhea and interfered with the way the body processes medications. In 1969, lab tests found that high doses of cyclamate were causing bladder tumors in rats. Shortly after, the FDA banned cyclamate from the market. Today, there are still sugar substitutes on the market, like aspartame, sucralose, and stevia, but cyclamate remains banned.

13. Lead acetate

In Ancient Rome, people didn't have access to the wide variety of sweeteners we have today, so they used something called sapa to sweeten their wine. The Romans made sapa by boiling unfiltered grape juice in lead pots, which then leached lead acetate into the juice. Lead acetate is a sweet-tasting salt.

Today, of course, we know of the wide range of problems associated with chronic lead exposure. As a neurotoxin, it can cause significant damage to the brain, potentially leading to a generation of serial killers, or, say, the toppling of one of the world's greatest empires. Lead poisoning can also cause other central nervous system problems and health conditions.

While it's been a while since lead acetate was used as a sweetener, up until a few years ago, it could be used in hair dye. Today, the FDA bans its use in food and cosmetics, and it's mainly used in some industrial processes.

14. Cocaine

There are tons of widely accepted facts that just aren't true. You don't have to wait an hour after eating to go swimming, and the idea that MSG is bad for you is a longstanding myth rooted in racism. One fun fact that is true, however, is that Coca-Cola did indeed use to contain actual cocaine.

To understand how hard drugs became common in soft drinks, we have to hop back to the Victorian era. At this time, the indigenous peoples of South America had been using the coca leaf for centuries. In 1860, a German chemist named Albert Niemann isolated cocaine from coca leaves. Within a few decades, pharmaceutical companies started using it in things like cough syrups and pain medication.

John Stith Pemberton, a pharmacist, created Coca-Cola in 1886. His drink was a mix of cocaine and a sugary syrup, and it was a big hit — but if people liked it so much, why did they take the cocaine out? Well, once again, it comes back to racism. Initially, Coca-Cola was sold only at racially segregated soda fountains. Once they started bottling it, people of color had access, too. With growing fear over the made-up boogeyman of the "Negro cocaine fiend," the company removed cocaine from its products in 1903.

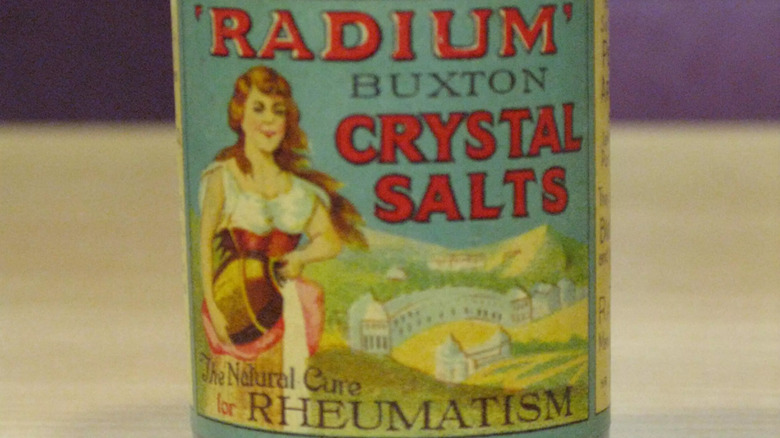

15. Radium

Marie Curie was one of the first to establish radiation protections in the lab and raised concerns about the way people were using radium so casually. Curie discovered radium in 1898, and just a few years later, the U.S. entered into what would later be called the "radium craze" or "radium fad." The most famous victims of the radium craze are the Radium Girls, but they were far from the only victims. In 1904, L.D. Gardner started patenting his "radium health water" called Liquid Sunshine, and it didn't take long for other companies to follow suit.

Today, of course, we have a better understanding of the horrors of radium. For example, the body can confuse radium for calcium and incorporate it into the bone structure. As the radium breaks down, it emits alpha particles, causing bone necrosis and bone cancer. Not exactly what you want your health tonic to do. By the 1930s, the radium craze had subsided, thanks, in part, to the scandal of the Radium Girls. Today, it serves as a cautionary tale against unproven health trends.

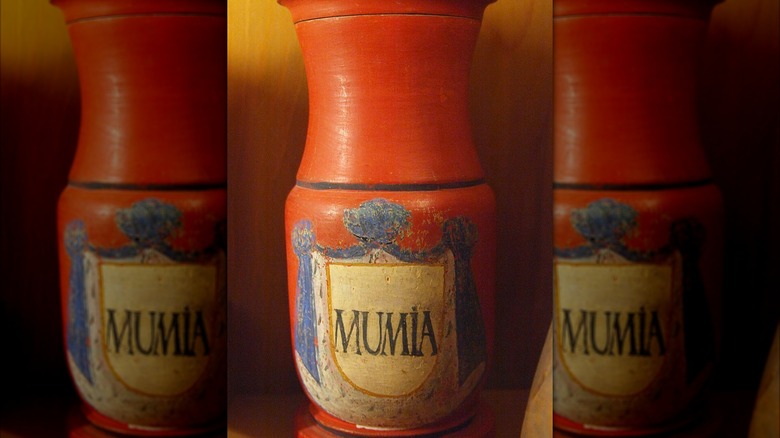

16. Mummies

If the entry on radium should tell you anything, it's that people will go to great lengths to cure what ails them. That includes turning to something called "medical cannibalism," where medical remedies are made using human flesh and blood. See where this is going?

Now, humans had been munching on dead bodies for a while before mumia came along in the 12th century. Mumia was a powder made from ground mummies that was prescribed for all kinds of things, like heart attacks and wounds. Obviously, we know now that eating humans is really not healthy. It's a great way to contract diseases, not all of which can be eliminated by cooking. Just ask the Fore people of Papua New Guinea, who fell victim to a prion disease called kuru. Additionally, mummies were preserved with many different substances, not all of which are edible.

Although medical cannibalism began to wane, interest in mummies saw a renewed surge in Victorian England, where mummy unwrappings became a popular pastime. This, and the use of mumia, didn't die off until the end of the 19th century.